The State of AI Detection

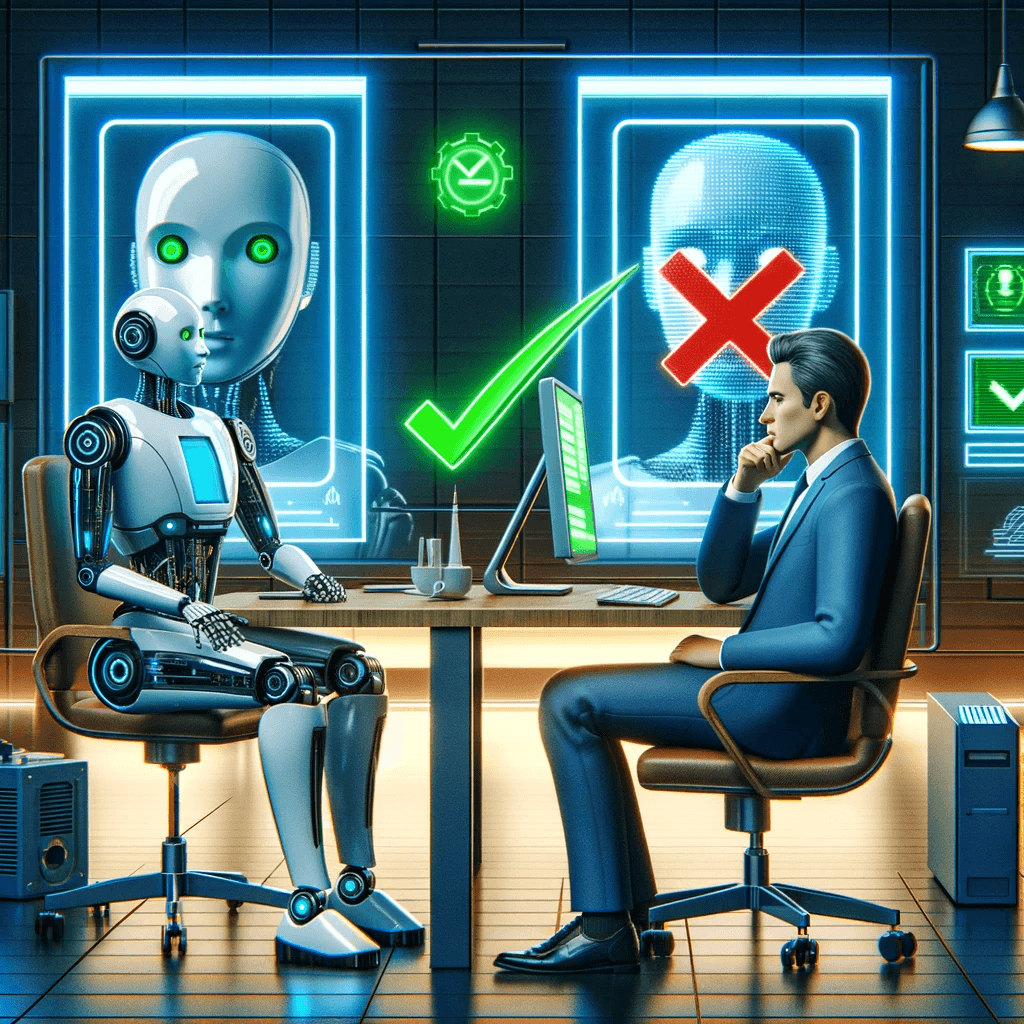

Human review is no longer a viable way to detect AI-generated images. With GenAI image precision and quality improving drastically in 2023, new AI-focused detection tools are necessary.

A self-proclaimed “AI Detection expert” claims to have unmasked a deepfake by counting five fingers on a politician's outstretched hand. The internet buzzes, pundits nod sagely, and the digital news cycle churns another click-worthy nugget. That was most of 2023.

As AI-generated imagery reaches new heights of realism and quality, traditional manual review methods are no longer enough. We stand at the dawn of an era defined by AI, one where both the promise and the perils are far greater. Although watermarks seemed a clever safeguard, anyone running an open-source model can now easily bypass them, creating confusion and room for misinformation to spread. Rather than resort to ineffectual tactics, we must take a more proactive approach: fight fire with fire.

AI-powered detection represents our best hope for meeting this moment.

Where human eyes falter, algorithmic analysis can succeed. While we struggle to spot manipulation in modern fakes, a well-trained detection model can pick up on signals we cannot see. These systems can rapidly process high volumes of content, pinpointing subtle statistical anomalies imperceptible to reviewers. As fakes reach photorealistic fidelity, manual verification will inevitably fail.

We're talking neural networks trained on terabytes of data, sniffing out inconsistencies and forgeries generated with AI.

AI's Leap Forward: Perfect Fingers and Flawless Text in AI Images

As AI image synthesis leaps forward in fidelity, its uncanny ability to create realistic images of people poses an unprecedented challenge for fraud detection. Where human analysis once sufficed, the field now demands more robust (or robot?) solutions.

Modern generative models produce imagery of near-photographic fidelity, successfully replicating the subtle details that once gave fakes away under manual review. Flawlessly proportioned hands, convincing textures, even accurate reflections: all are rendered precisely enough to match real visual complexity.

These advancements let AI-generated content slip past the very inspection points designed to catch it. With images and voices indistinguishable from reality, traditional methods falter. Our eyes are not the AI detection tool they once were.

Through multilayered analysis of statistical abnormalities beyond visible cues, sophisticated AI verification prevails where human perception fails. By recognizing patterns and anomalies imperceptible to us, these systems identify what our eyes cannot.
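To make "statistical abnormalities beyond visible cues" a little more concrete, here is a minimal sketch of one crude pixel-level statistic. It is a toy and not how any production detector works: real systems are trained classifiers that combine many learned signals, and this single number cannot tell an AI image from a real one. The function names (`residual_energy`, `smooth_gradient`, `noisy_image`) and the list-of-lists grayscale format are assumptions made purely for illustration.

```python
import random

def residual_energy(img):
    """Mean absolute difference between each interior pixel and the
    average of its four neighbours (a crude high-pass statistic)."""
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            neighbours = (img[y - 1][x] + img[y + 1][x]
                          + img[y][x - 1] + img[y][x + 1]) / 4.0
            total += abs(img[y][x] - neighbours)
            count += 1
    return total / count if count else 0.0

def smooth_gradient(size):
    """Hypothetical test image: a perfectly smooth diagonal ramp."""
    return [[x + y for x in range(size)] for y in range(size)]

def noisy_image(size, seed=0):
    """Hypothetical test image: independent random pixel values."""
    rng = random.Random(seed)
    return [[rng.randint(0, 255) for _ in range(size)] for _ in range(size)]

smooth_score = residual_energy(smooth_gradient(16))
noisy_score = residual_energy(noisy_image(16))
print(smooth_score, noisy_score)
```

The two test images look very different to this statistic even though a reviewer would never compute it by eye. A detection model can learn from many measurements of this kind at once, which is the sense in which it sees what we cannot.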

Watermarking in GenAI: A Government-Endorsed Solution Exploited by Fraudsters

As generative models advance, governments scramble for solutions, eyeing watermarks as a viable defense. While watermarks seem like an intuitive safeguard, the reality is far more complex.

Open-source models cannot stop developers from removing the watermark from their outputs. By stripping the watermarking step out of the source code, fraudsters sidestep this defense entirely.
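The weakness described above can be illustrated with a deliberately simplified sketch. It assumes a toy least-significant-bit watermark and a stand-in `generate` function that returns fixed fake pixel values. Neither reflects how any real generative model or watermarking scheme is implemented, and every name here (`MARK`, `embed_watermark`, `has_watermark`, `generate`) is hypothetical.

```python
MARK = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical 8-bit signature

def embed_watermark(pixels):
    """Write MARK into the least significant bit of the first pixels."""
    out = list(pixels)
    for i, bit in enumerate(MARK):
        out[i] = (out[i] & ~1) | bit
    return out

def has_watermark(pixels):
    """Detector: check whether the leading low bits spell out MARK."""
    return [p & 1 for p in pixels[:len(MARK)]] == MARK

def generate(apply_watermark=True):
    """Stand-in for an open-source generator (fixed fake 'pixels').
    A fork only needs to skip the final step to ship unmarked output."""
    pixels = [200, 13, 54, 90, 121, 37, 8, 255, 64, 32]
    return embed_watermark(pixels) if apply_watermark else pixels

official = generate(apply_watermark=True)
forked = generate(apply_watermark=False)
print(has_watermark(official), has_watermark(forked))
```

When the watermark is applied by code the user controls, turning it off is a one-line change, and a detector that relies on it has nothing left to find.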

Watermarks can also muddy the waters around genuine images. Legitimate images risk false positives when inspection systems misclassify real photos as AI-doctored. Picture a news photo of a political rally, adorned with watermarks from every major outlet. Suddenly, verifying its authenticity becomes a matter of opinion, not fact. This creates unwanted consequences, such as ‘misinformation doubt.’

Relying on watermarks creates ambiguity instead of clarity. Still, governments continue pushing fallible tactics, endorsing “easy” but exploitable fixes.

The Future of AI Detection

As the quality of AI-generated imagery continues to improve, traditional methods of human review are becoming increasingly insufficient. The days of human eyes straining over digital canvases, squinting at fingers and text in images are over. The high-fidelity nature of modern AI models makes it difficult for even trained professionals to detect AI-generated content with certainty, no matter how hard they zoom.

We humans struggle with subtle inconsistencies, our biases cloud our judgment, and fatigue sets in faster than it does for someone hosting a holiday dinner in December. AI, on the other hand, never blinks, never gets bored, and never falls prey to confirmation bias.

There is a growing recognition that we must shift towards more sophisticated AI-based detection tools. Unlike human reviewers, AI-based detection tools can analyze content at a granular level, identifying subtle patterns and inconsistencies that are invisible to the human eye. This level of precision is essential in an age where deepfakes and other forms of AI-generated content are becoming increasingly prevalent.

This shift towards AI-based detection is not only necessary but also inevitable. As Generative AI becomes more advanced, the need for sophisticated detection tools will only increase. Embracing this shift now will enable us to stay ahead of the curve, mitigating risks and ensuring that AI is used responsibly and ethically.

AI will advance regardless. With advanced protections, threats become catalysts rather than dealbreakers, and it starts with AI detection.