The Rise of Fake Influencers and How AI Blocker Detected the Secret Networks Gaming Instagram and Fanvue

From Higgsfield AI to a $130 million "slop machine," we go inside the industrial-scale content farms replacing human creators with synthetic ghosts

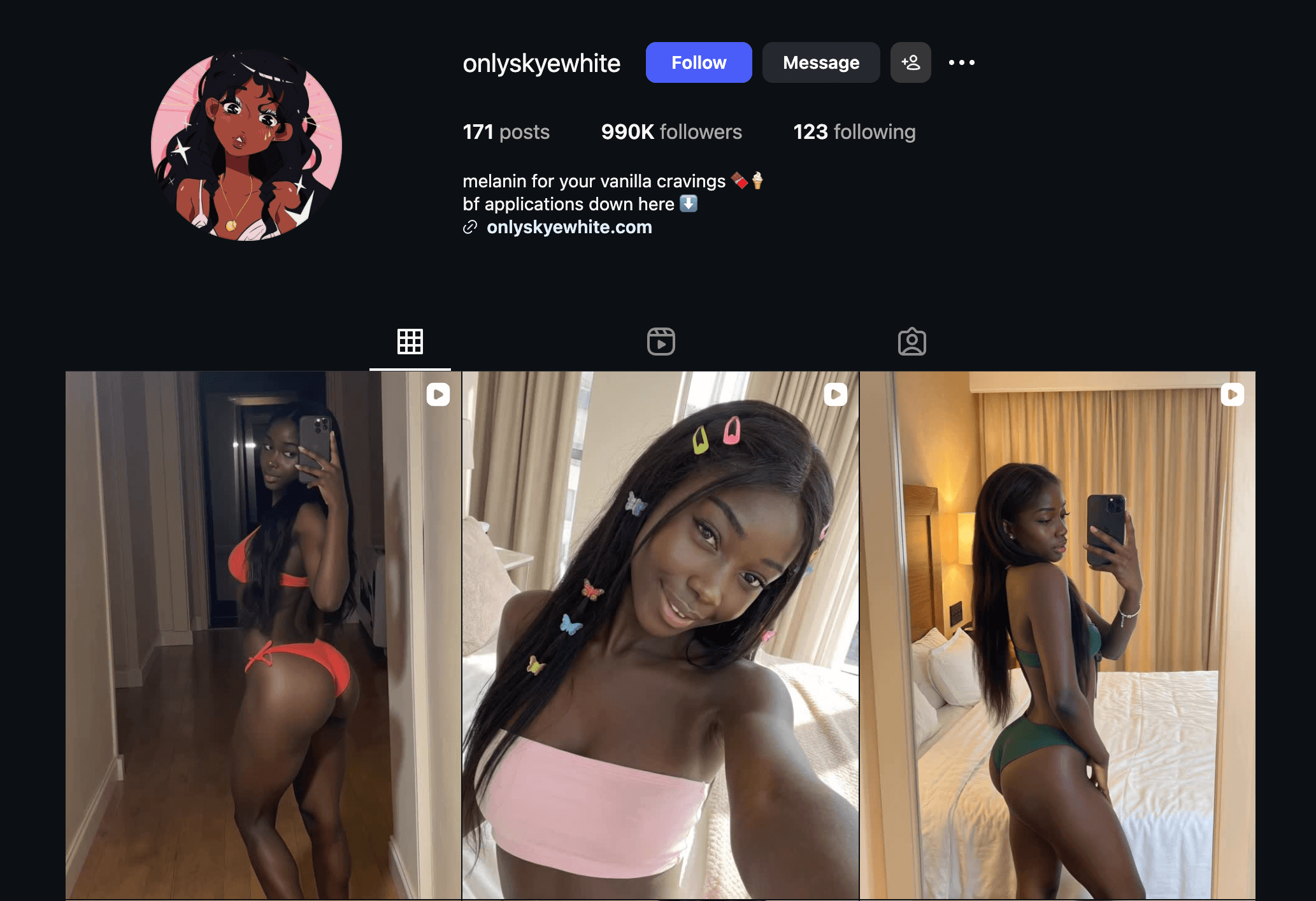

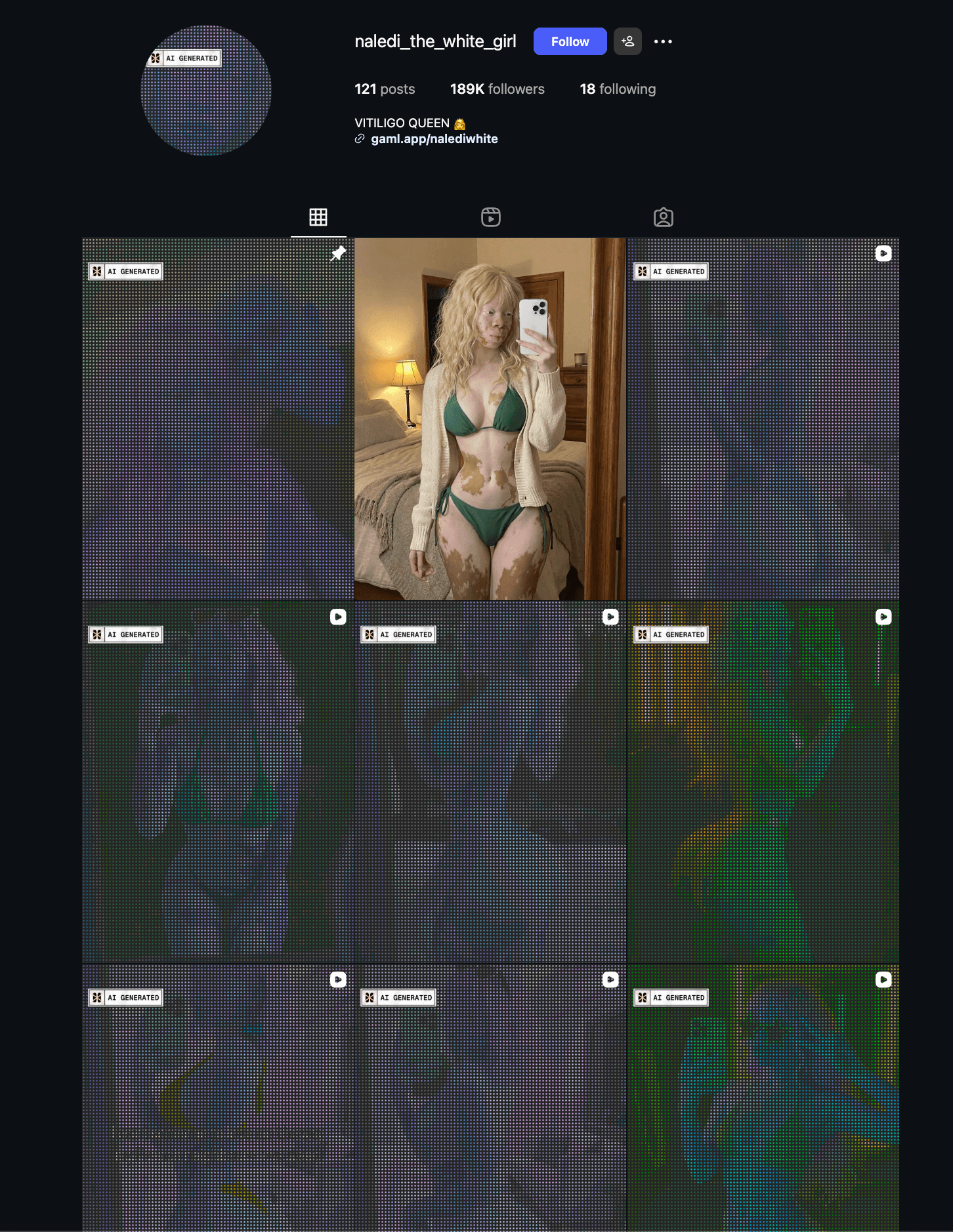

The “Dead Internet Theory” used to be a paranoid creepypasta about bots talking to bots. In 2026, it reads like a standard Series A pitch deck. If you have spent any time on Instagram recently, you may have noticed that your feed feels like a high-definition hallucination or a fever dream. What you are seeing is a specific type of content: hyper-realistic, hyper-perfect AI model accounts. They look and post like people, but they are the outputs of "Influencer Factories."

The $130 Million Slop Machine

This is not just a few bored individuals or a group overseas with a laptop. We are entering the era of industrial-scale content farming fueled by venture capital. At the heart of this shift is Higgsfield AI, one of the primary tools used to generate videos and face-swapped content for these accounts and “content creators.” Higgsfield recently raised $130 million, reaching a valuation of $1.3 billion in early 2026.

While investors talk about “democratizing cinematic storytelling,” the reality on the ground is a flood of “slop.” These tools, alongside others like 'Wan 2.2' (known for facial swaps), 'Nano Banana 2 pro' (for editing images or inserting people into them), and 'DarLink AI' (for creating NSFW content), are being used to mass-produce personas that either do not exist or mimic a celebrity's look.

The incentives are simple: maximum output and zero human labor. A factory owner or creator can run a number of "models" simultaneously, 24/7, without ever paying for essentials like makeup, housing, or photography.

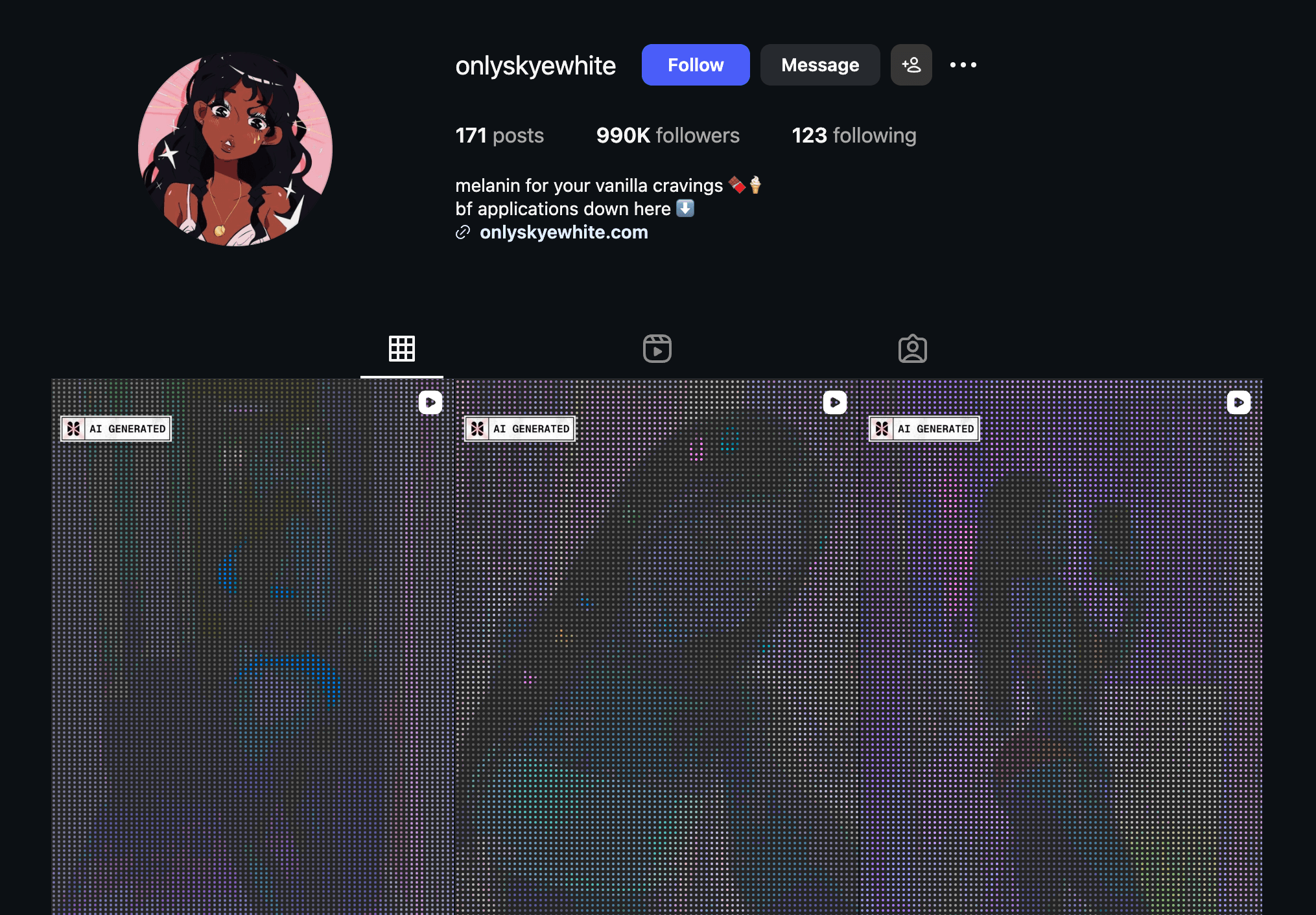

How We Caught Them: The AI Blocker Report

We did not eyeball these accounts.

We did not hunt for extra fingers or uncanny smiles.

And we did not rely on vibes.

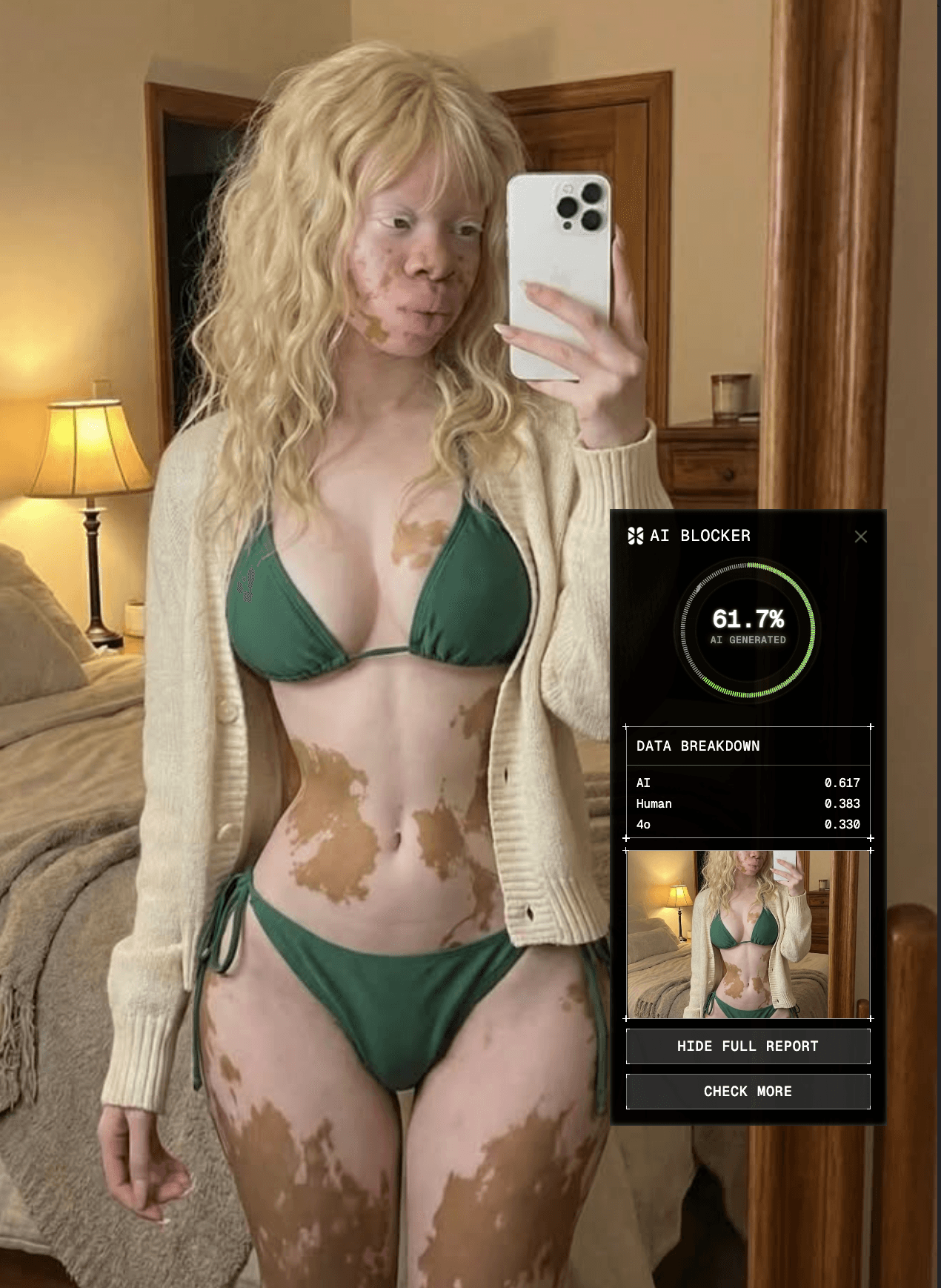

We used AI Blocker to scan each account in real time, measuring how much of their content was AI generated as we moved through their Instagram accounts. We then went a step deeper, analyzing account metadata, location, claims, age signals, username history, posting patterns, and follower graphs. We traced who followed whom, which accounts moved together, and where their trails converged. The goal was not just to flag a fake image, but to find the factory behind it.
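To make "which accounts moved together" concrete, here is a minimal sketch of one way to score follower overlap between accounts, using Jaccard similarity. It is an illustration only, not AI Blocker's actual method: the function names, the 0.5 threshold, and the sample accounts are all hypothetical.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of followers two accounts have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def linked_pairs(followers: dict[str, set[str]], threshold: float = 0.5):
    """Return account pairs whose follower overlap meets the threshold.

    The threshold is an assumed value chosen for illustration.
    """
    pairs = []
    for x, y in combinations(sorted(followers), 2):
        score = jaccard(followers[x], followers[y])
        if score >= threshold:
            pairs.append((x, y, round(score, 2)))
    return pairs


# Hypothetical follower sets, not data from the investigation.
sample = {
    "persona_a": {"u1", "u2", "u3", "u4"},
    "persona_b": {"u2", "u3", "u4", "u5"},
    "real_creator": {"u9", "u10"},
}

print(linked_pairs(sample))
```

Two personas that share most of their followers score high and surface as a pair, while an unrelated account drops out. In practice, overlap alone is a weak signal and would need to be combined with the other behavioral checks described above.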

Platforms like Meta have little incentive to crack down as long as engagement stays high. Synthetic content performs. Ads get served. Everyone gets paid. AI Blocker is built for the opposite purpose: identifying the technical and behavioral fingerprints that moderators and platform policies ignore.

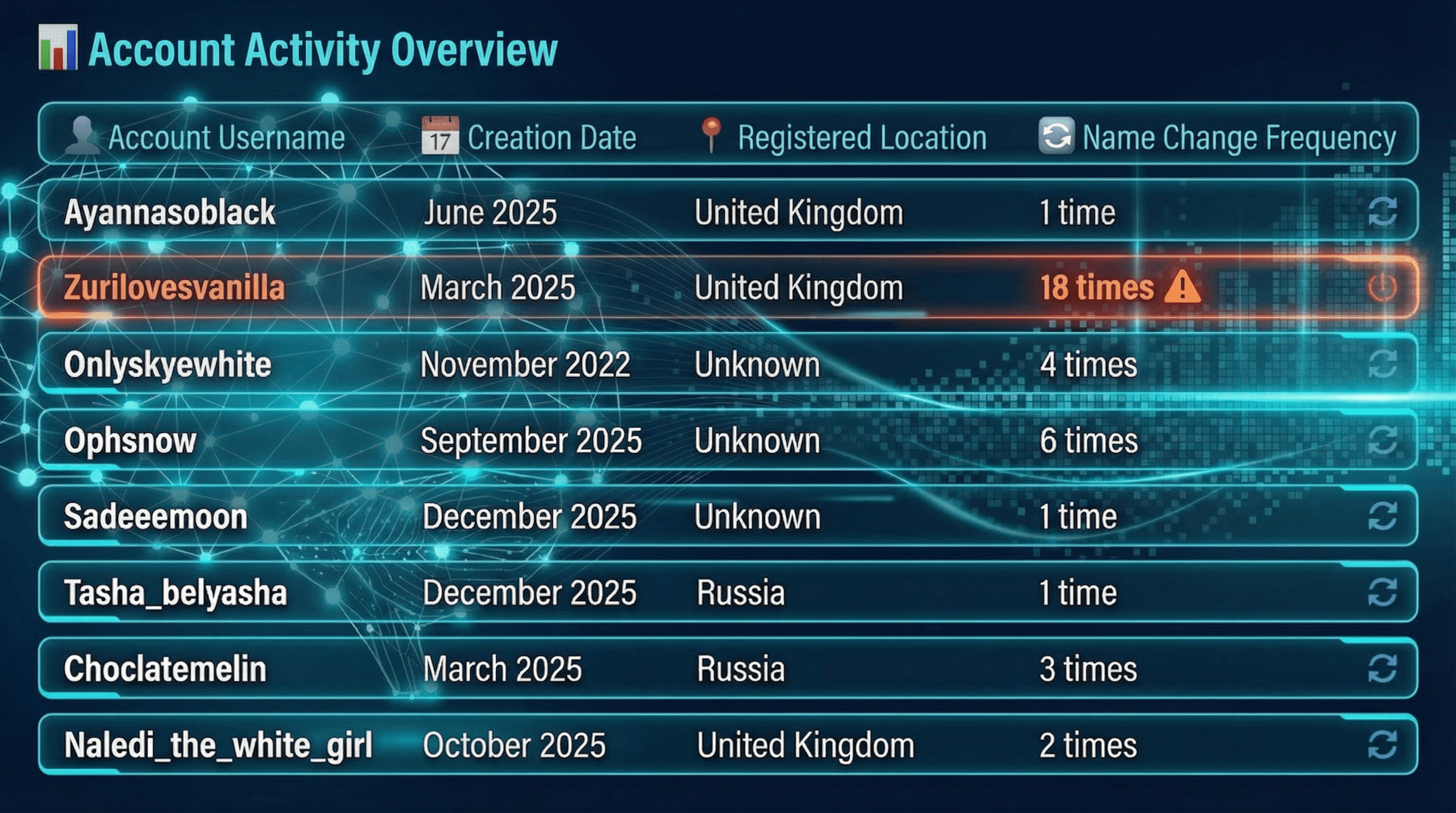

The Rebranding Loop

The account Zurilovesvanilla did not just have minor errors or inconsistencies. It had changed usernames more than 18 times since March 2025. Factory operators tend to acquire aged accounts or rapidly rotate identities to probe what the algorithm favors, settling on whatever niche gains traction before scaling the synthetic accounts.
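A rename count only means something relative to time. As a rough sketch, assuming you already have a list of dates on which an account changed its username, the rotation rate can be expressed as renames per 30 days. The function name and the sample dates below are hypothetical and are not taken from the account above.

```python
from datetime import date


def renames_per_month(change_dates: list[date], as_of: date) -> float:
    """Average username changes per 30 days since the first recorded change."""
    if not change_dates:
        return 0.0
    first = min(change_dates)
    # Guard against a zero-day window when the only change happened today.
    days = max((as_of - first).days, 1)
    return len(change_dates) / (days / 30.0)


# Hypothetical rename history for illustration.
history = [date(2025, 3, 1), date(2025, 3, 31)]
print(renames_per_month(history, as_of=date(2025, 4, 30)))
```

A typical personal account would sit near zero on this measure, so a sustained rate of one or more renames per month stands out.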

Places That Aren’t Real

We ran travel photos posted by Naledi_the_white_girl through the AI or Not reverse image search to see whether these scenes already existed online outside of the account. Several images came back matched to an original photograph, exposing the telltale signs of prompt-based generation.

The Metadata

All images and videos were run through AI or Not, even after being confirmed by AI Blocker. Metadata are the details platforms do not show to users, but they persist beneath the surface of an image or video produced by a generative model. Many files carry this information in the form of encoder tags, timestamps, and watermarks. AI or Not surfaced inconsistent encoder tags, abnormal render timestamps, and processing patterns associated with generative AI rather than real content shot on a camera.
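For readers who want a feel for what metadata checks look like, here is a minimal sketch that inspects an already-extracted metadata dictionary. It is not how AI or Not works internally. It assumes the metadata uses standard EXIF tag names (`Make`, `Model`, `Software`, `DateTimeOriginal`), and the three heuristics are our own simplified examples.

```python
from datetime import datetime

# Standard EXIF tags a camera or phone normally writes.
CAMERA_FIELDS = ("Make", "Model", "DateTimeOriginal")


def metadata_flags(meta: dict[str, str], posted_at: datetime) -> list[str]:
    """Return human-readable warnings for suspicious metadata (heuristic only)."""
    flags = []

    # 1. Camera fields that a real capture would usually include.
    missing = [f for f in CAMERA_FIELDS if not meta.get(f)]
    if missing:
        flags.append("missing camera fields: " + ", ".join(missing))

    # 2. An encoder/software tag with no camera make behind it.
    software = meta.get("Software", "")
    if software and not meta.get("Make"):
        flags.append(f"software tag without camera make: {software}")

    # 3. A capture time that falls after the post went live.
    created = meta.get("DateTimeOriginal")
    if created:
        taken = datetime.strptime(created, "%Y:%m:%d %H:%M:%S")  # EXIF date format
        if taken > posted_at:
            flags.append("capture time is later than post time")

    return flags


# Hypothetical metadata for illustration.
suspect = {"Software": "ExampleRenderer 1.0", "DateTimeOriginal": "2026:01:10 12:00:00"}
for flag in metadata_flags(suspect, posted_at=datetime(2026, 1, 9, 8, 0, 0)):
    print(flag)
```

None of these flags is proof on its own. Platforms often strip metadata on upload, and edited real photos can trip the same checks, so treat them as prompts for a closer look.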

The Network: Stables of Ghosts

These accounts are not the passion projects of longtime digital artists. They are the output of "factories" run by individuals like tiger.b.n and artem_kochkonian.

These operations do not just run one page; they manage a stable of synthetic assets. For instance, tiger.b.n is behind at least two major personas, including Tasha_belyasha. artem_kochkonian manages a network that includes Arthur is Black, using these faces as a front to funnel users into Telegram groups, where they sell courses on, you guessed it, how to build your own AI models.

The Funnel: From “Free” to Fanvue

The goal of this content is not organic migration. Instagram serves as the top of the funnel. Users are then directed to a 'Link in bio' leading to Fanvue. Unlike OnlyFans, which has tightened its ID verification and creator requirements, Fanvue has positioned itself as the AI-friendly alternative. With lower commission rates than OnlyFans' 20%, it is the preferred destination for synthetic factories looking to maximize margins.

But the real meta scam happens on Telegram. Operators like artem_kochkonian use accounts like Arthur is Black to funnel people into groups where they do not just sell pictures; they sell courses. For a few hundred dollars, they will teach you how to set up your own influencer factory, perpetuating the cycle of platform degradation.

Why This Matters

This is neither art nor innovation. It is the synthetic replacement of human connection with automated extraction. It devalues real creators who cannot compete with the 24/7 output of a GPU and turns our social spaces into a hall of mirrors where nothing is quite what it seems. The platforms will not stop this, because engagement from a bot looks the same as engagement from a human in a quarterly report. It is up to us to look for the seams.