How to Tell if ChatGPT Wrote Something

Have a feeling that someone used ChatGPT to write? Here's what to look for to detect ChatGPT writing.

You get an email or stumble upon an article online, and a couple of sentences in, something feels not quite right. You can't put your finger on it, but a few sentences are grammatically fine and still seem a little off.

Then it hits you: could ChatGPT have written this?!

Given the astronomical rise of this chatbot, it wouldn't be shocking. But how can you really tell if a piece was written by an AI tool? Even without a ChatGPT detector, there are telltale signs that ChatGPT wrote the article you're reading.

Top 10 Signs to Detect ChatGPT Text (Plus a Bonus One!)

Who better to ask for signs, or "tells", that ChatGPT wrote something than ChatGPT itself? If a ChatGPT detector is not at your disposal, we've compiled a list of what to look for when identifying ChatGPT writing.

1. The article restates your questions or gives you basic definitions.

Because of how Large Language Models (LLMs) are trained, AI replies often kick off by repeating your prompt. Why? Because the tool builds its answer around the exact words you gave it. Think of it as an intern mirroring the user's wording in the reply to get a pat on the back.

Aside from this, it also tends to define common terms before diving into the topic. Spelling out the basics first seems to help it anchor the rest of the answer in the right context.

Sample Question:

What is blockchain?

Sample AI Answer:

"Blockchain is a decentralized digital ledger that records transactions across a network of computers. So, when you ask, ‘What is blockchain?’ it refers to a system that securely stores data in blocks linked together chronologically."

2. ChatGPT loves lists, frameworks, and headings.

ChatGPT is the king of enumeration and frameworks. Even within a single paragraph, it tends to give you three parallel items. Expect neat bullet points, numbered checklists, or tidy section headers... see what we did there?

Why, you may ask? The AI tool is trying to organize the data from its knowledge bank. At times, it tries to organize its users' thoughts, too.

Sample Question:

How can I improve my sleep?

Sample AI Answer:

"There are several ways you can improve your sleep quality:

Set a consistent bedtime and wake-up schedule.

Limit screen time an hour before bed.

Create a dark, quiet, and cool sleep environment."

3. The language sounds too careful for a human.

AI tools are being regulated globally. Scary for AI companies! Some LLMs, including ChatGPT, are being trained to leave a "grey area" around their claims. Thus, careful hedges like "generally" and "it depends", and careful numerics like "about 10-15%", show up again and again.

Sample Question:

Is coffee good for your health?

Sample AI Answer:

"Coffee is generally considered safe for most adults and may even offer some health benefits, such as improved focus and antioxidants."

You can never be too safe... even for the simple subject of coffee.
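For the curious, here is a minimal Python sketch of how you might count hedges like the ones above. The `HEDGES` list and the `hedge_rate` function are our own illustrative assumptions, not how any real ChatGPT detector works, and a high score is only a hint, never proof.

```python
import re

# Hypothetical hedge list: seeded with examples from this article,
# plus "may" and "most" from the sample answer. Extend it as you see fit.
HEDGES = ("generally", "it depends", "may", "about", "most")


def hedge_rate(text: str) -> float:
    """Return the number of hedge phrases per 100 words (0.0 for empty text)."""
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    if not words:
        return 0.0
    # Count whole-word (or whole-phrase) matches for each hedge.
    hits = sum(
        len(re.findall(r"\b" + re.escape(hedge) + r"\b", lowered))
        for hedge in HEDGES
    )
    return 100.0 * hits / len(words)


ai_sample = (
    "Coffee is generally considered safe for most adults and may even offer "
    "some health benefits, such as improved focus and antioxidants."
)
human_sample = "I drink coffee every day and I love it."

print(round(hedge_rate(ai_sample), 1), round(hedge_rate(human_sample), 1))
```

The careful coffee answer scores far higher than the blunt human one. Plenty of careful humans hedge too, so treat the number as one clue among many.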

4. The opinions are balanced and bland.

In line with sign number 3, ChatGPT detectors have picked up that the tool consistently favors the safe middle ground when sharing opinions. It offers caveats, lists pros and cons, and just won't pick a side of the ring.

A solid stance is definitely one thing AI can't emulate from humans... yet.

Sample Question:

Is remote work better than working in the office?

Sample AI Answer:

"Remote work offers benefits like flexibility, reduced commuting, and better work-life balance. On the other hand, office work can provide clearer collaboration, stronger team culture, and easier access to resources."

5. It's too politely impersonal.

Despite its large knowledge bank, ChatGPT still can't emulate human anecdotes, edgy humor, or relatable slang. In lieu of those, it leans on suggestive phrasing like "we can..." or "consider...", as if handing the action or the thinking back to its user.

Actually, try this:

Sample Question:

Can you be sarcastic about working in the office?

Sample AI Answer:

"Sure, because everyone definitely misses crowded commutes and fluorescent office lighting."

It started off strong... then it fumbled when it got too technical about the office lights.

6. Overt predictability.

AI detectors often rely on how predictable ChatGPT's phrasing is. Stock phrases such as "In short" or "Put simply" are its attempts to tie up loose ends and sound human.

Sample Question:

Why is exercise important?

Sample AI Answer:

"Exercise improves cardiovascular health, strengthens muscles, and boosts mental well-being. In short, it’s one of the best ways to support your overall health."

7. Too cautious about specific details.

Specificity is a gift ChatGPT has yet to receive. It tends to avoid particular dates, niche brand opinions, and bold claims without sources. Thus, it often falls back on general, even noncommittal, insights.

Sample Question:

When will AI replace most jobs?

Sample AI Answer:

"It’s difficult to predict an exact date or year. Generally, experts suggest that automation and AI could affect certain industries over the next decade or two, but the timeline will depend on factors like government policy, technology adoption rates, and ethical considerations."

8. Goes hard on the basics.

In its attempt to sound like an expert without getting too specific, it over-explains definitions, background, and step-by-step procedures.

In fact, try asking it about its opinion on the internet!

Sample Question:

What is your opinion about the internet?

Sample AI Answer:

"The internet is a vast global network that connects people, businesses, and institutions through digital communication. It has transformed how we access information, enabling instant sharing of knowledge, entertainment, and services."

... so what's your opinion then, Sir?

9. Randomly becomes ethical.

It's almost comical how ChatGPT randomly turns ethical in its answers, seemingly just to avoid potential lawsuits. It inserts phrases like "this is not medical advice" or adds legal considerations and data privacy notes, at times even when nobody asked.

Sample Question:

How many cups of coffee are safe to drink in a day?

Sample AI Answer:

"Most research suggests that about 3–4 cups per day is generally safe for healthy adults. This is not medical advice, and it’s best to consult a healthcare professional for guidance specific to your situation."

10. Closer to a textbook than to a human.

AI checkers often look at the parallelism of an article. If the phrasing, punctuation, and even analogies are too consistent, chances are ChatGPT wrote it. That doesn't mean parallelism should be thrown out the window; just don't be too rhythmic about it.

Oh, and the em-dash is one of the best-known telltale signs of the mighty ChatGPT.
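If you want to poke at this sign yourself, here is a rough Python sketch. The `rhythm_signals` function and its `min_len` cutoff are hypothetical names we made up for illustration; real AI checkers almost certainly do something more sophisticated. It simply counts em-dashes and finds the most repeated longer word, run here on the sample answer from this section.

```python
import re
from collections import Counter

EM_DASH = "\u2014"  # written as an escape so the character is easy to spot


def rhythm_signals(text: str, min_len: int = 5) -> dict:
    """Count em-dashes and find the most repeated word of at least min_len letters."""
    words = [w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= min_len]
    top_word, top_count = "", 0
    if words:
        top_word, top_count = Counter(words).most_common(1)[0]
    return {
        "top_word": top_word,
        "top_count": top_count,
        "em_dashes": text.count(EM_DASH),
    }


sample = (
    "Teamwork improves communication, teamwork boosts efficiency, and "
    "teamwork strengthens relationships. In short, teamwork creates a "
    "stronger, more productive workplace \u2014 and it benefits everyone involved."
)

print(rhythm_signals(sample))
```

One word repeated four times in two sentences, plus an em-dash, is the kind of rhythm this sign is about. Human writers do both as well, so this is a nudge, not a verdict.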

Sample Question:

Why is teamwork important in the workplace?

Sample AI Answer:

"Teamwork improves communication, teamwork boosts efficiency, and teamwork strengthens relationships. In short, teamwork creates a stronger, more productive workplace — and it benefits everyone involved."

BONUS SIGN: Check your KPIs!

Since ChatGPT is widely used in businesses (72% of businesses now use AI), it's funny how it almost automatically mentions KPIs when the conversation gets a bit too technical. Slow down, Sir, this is not a client presentation!

Sample Question:

How can I get better at running?

Sample AI Answer:

"Improving your running involves consistency, proper form, and gradual progress. One way to track your improvement is by setting personal Key Performance Indicators (KPIs), such as average pace, distance covered per week, or recovery time."

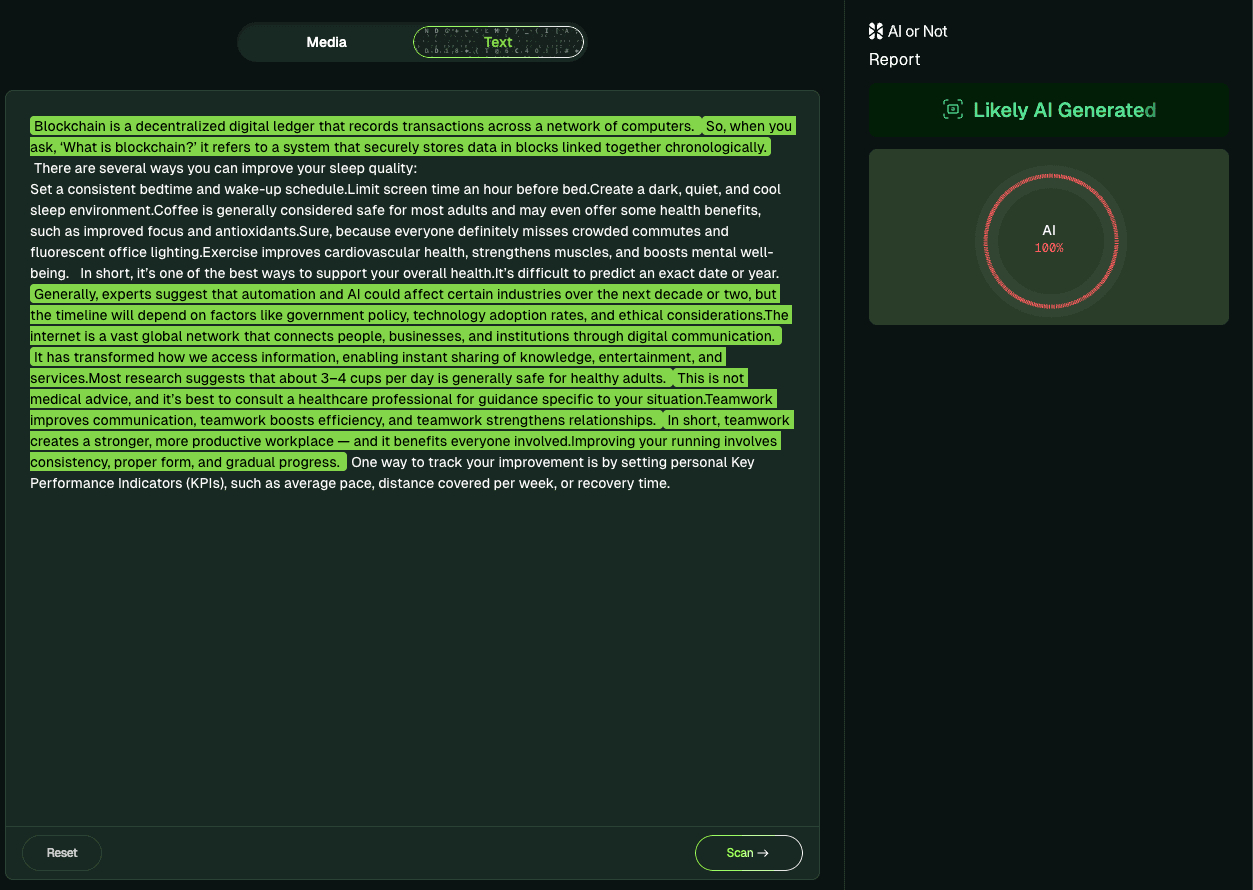

Our ChatGPT Detector Covers This... And More.

Are you a human AI checker now? If you said YES, then good for you!

But if your answer is NO, then you may need a free AI checker.

Truth is, you're going to face hundreds of other LLMs around the world aside from ChatGPT. AI or Not has trained its AI detectors on millions of samples of written text to understand what makes a text AI or not.

Don't worry, it's free and it doesn't require any KPI. See what we did there?

Frequently Asked Questions

What is a ChatGPT detector?

It's a tool that lets users check whether a text was written by AI.

Why do I need a ChatGPT detector?

There are still no strict regulations when it comes to ChatGPT use. That said, the information bank behind the tool, like those of other LLMs, is largely unchecked and unsupervised, so it's prone to citing erroneous and outdated data.

What is the most reliable ChatGPT detector?

AI or Not is a free ChatGPT detector that has been trained on output from multiple LLMs to detect AI text. It's a great tool to start your no-AI journey!